Reduce ChatGPT Cost by 98% with Optimized Prompt Engineering

- Aug 14, 2023

The rise of AI is well and truly here. Many businesses are adopting ChatGPT to deliver human-like responses, and companies such as OpenAI, AI21, and Cohere offer LLMs (Large Language Models) as services. The demand for generative AI will only increase from here. But all of this comes at a cost, and we mean that literally: using AI can become expensive in the long run, so it is natural to wonder whether it is possible to reduce ChatGPT costs.

The short answer is yes. Before finding out how to do it, let’s understand what affects these prices.

Why the high prices?

All these LLMs are available to users at a price. The issue isn’t that you have to pay to use them; it is how much that can end up costing.

The expenses associated with using ChatGPT can accumulate fast, especially for businesses that send a large number of queries.

ChatGPT is estimated to cost over $700,000 per day to operate. Further, using GPT-4 to support customer service can cost a small business over $21,000 a month. For many businesses, that is not feasible. Therefore, we need to figure out how to reduce ChatGPT costs so that it becomes more accessible.

Factors affecting the high cost of LLMs

Today, we are going to see how to slash these prices. But before that, let’s figure out why they are so high.

These models come with varied pricing structures, and their fees can differ by as much as two orders of magnitude.

The pricing of generative AI models is determined by the length of the input/prompt and the output, with costs calculated per token.

The cost of using an LLM API typically consists of three components:

- prompt cost (proportional to the length of the prompt),

- generation cost (proportional to the generation length), and

- sometimes a fixed cost per query.
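The three components above can be put together in a rough estimate. This is a minimal sketch: the function name `estimate_query_cost` and the per-1,000-token prices are hypothetical values chosen for illustration, not any provider's actual rates.

```python
def estimate_query_cost(prompt_tokens, output_tokens,
                        prompt_price_per_1k, output_price_per_1k,
                        fixed_fee=0.0):
    """Estimate the cost of one API call in dollars."""
    # Prompt cost grows with the length of the input.
    prompt_cost = prompt_tokens / 1000 * prompt_price_per_1k
    # Generation cost grows with the length of the output.
    generation_cost = output_tokens / 1000 * output_price_per_1k
    # Some providers also charge a flat fee per query.
    return prompt_cost + generation_cost + fixed_fee


# Hypothetical prices in USD per 1,000 tokens, for illustration only.
cost = estimate_query_cost(1500, 500,
                           prompt_price_per_1k=0.03,
                           output_price_per_1k=0.06)
monthly_cost = cost * 10_000  # assuming 10,000 such queries a month
print(f"per query: ${cost:.4f}, per month: ${monthly_cost:.2f}")
```

Plugging in your own token counts and your provider's published prices shows quickly whether the prompt or the generated output dominates the bill.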

How to reduce the cost of using LLMs like ChatGPT?

Even though the prices might be a bit steep, there are ways to bring them down.

Here are three strategies users can implement for cost reduction:

- Prompt adaptation

- LLM approximation

- LLM cascade

1. Prompt Adaptation

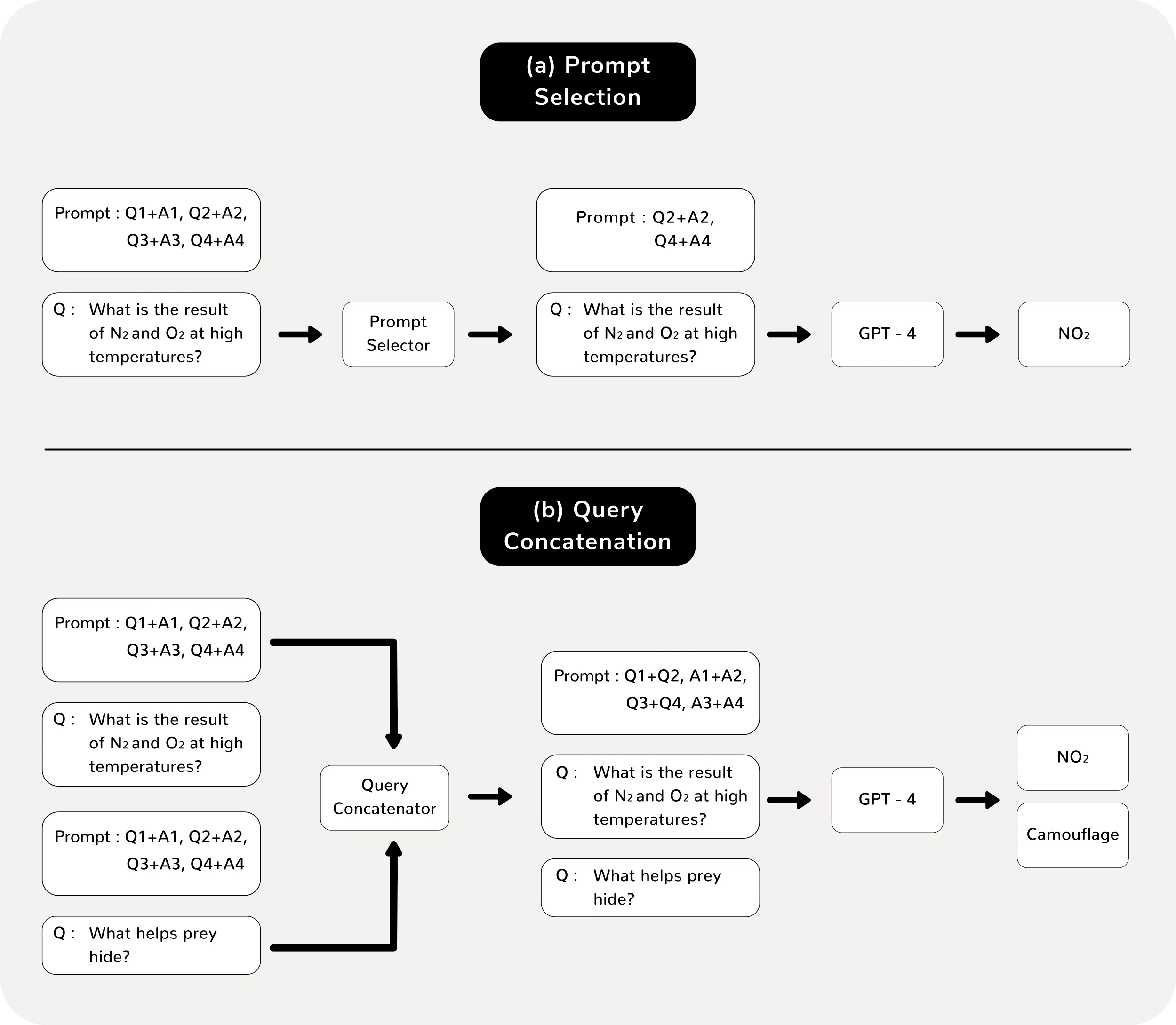

Prompt adaptation means modifying the prompt to reduce cost or improve the model's performance. There are two ways to implement it.

Prompt Selection: This technique involves selecting a smaller subset of examples instead of using a prompt that contains numerous examples. Fewer examples mean a shorter prompt, and therefore a lower prompt cost.

The challenge here is deciding which examples to keep while maintaining the desired task performance.
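One simple way to decide which examples to keep is to rank them by how similar they are to the incoming query. The sketch below uses word overlap as the similarity measure. That heuristic, the helper names, and the sample data are all assumptions made for illustration; other selection strategies are possible.

```python
import re


def words(text):
    """Lowercase a text and return its set of words."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def select_examples(query, examples, k=2):
    """Keep the k examples whose input shares the most words with the query."""
    query_words = words(query)

    def overlap(example):
        example_words = words(example["input"])
        union = query_words | example_words
        return len(query_words & example_words) / len(union) if union else 0.0

    return sorted(examples, key=overlap, reverse=True)[:k]


def build_prompt(query, examples):
    """Format the selected examples and the query as a few-shot prompt."""
    shots = "\n".join(f"Q: {e['input']}\nA: {e['output']}" for e in examples)
    return f"{shots}\nQ: {query}\nA:"


# Hypothetical example pool for a support bot.
examples = [
    {"input": "How do I reset my password?", "output": "Use the 'Forgot password' link."},
    {"input": "Where is my invoice?", "output": "Invoices are under Billing."},
    {"input": "How do I change my password?", "output": "Go to Settings, then Security."},
    {"input": "Can I export my data?", "output": "Yes, from Settings, then Export."},
]
query = "I forgot my password, how do I reset it?"
selected = select_examples(query, examples, k=2)
prompt = build_prompt(query, selected)
print(prompt)
```

Here the prompt carries two examples instead of four, so it is roughly half as long while still showing the model the most relevant cases.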

Query Concatenation: Here, you combine multiple queries into a single prompt that explicitly instructs the language model API to process all of them. Instead of sending each query separately, you send them together in one request.

This approach eliminates redundant prompt processing and reduces costs, since the shared part of the prompt (instructions and examples) is sent only once.
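A minimal sketch of query concatenation might look like the following. The numbered-answer format and both helper names are assumptions for illustration, and the model's reply is replaced by a hard-coded string so the example runs without any API.

```python
import re


def build_batched_prompt(instructions, queries):
    """Put the shared instructions once, followed by all queries, numbered."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(queries, start=1))
    return (
        f"{instructions}\n\n"
        "Answer each question below. Reply with one line per question "
        "in the form '<number>. <answer>'.\n\n"
        f"{numbered}"
    )


def split_batched_answer(text, n_queries):
    """Map a numbered reply back to the individual queries (None if missing)."""
    answers = {}
    for line in text.splitlines():
        match = re.match(r"\s*(\d+)\.\s*(.+)", line)
        if match:
            answers[int(match.group(1))] = match.group(2).strip()
    return [answers.get(i) for i in range(1, n_queries + 1)]


queries = ["What is the capital of France?", "What is the capital of Germany?"]
prompt = build_batched_prompt("You are a geography assistant.", queries)

# Stand-in for the model's reply; a real call would go to your LLM API.
reply = "1. Paris\n2. Berlin"
print(split_batched_answer(reply, len(queries)))
```

The parsing step matters in practice: if the model skips or merges an answer, the missing entry comes back as `None` and that query can be retried on its own.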

2. LLM Approximation

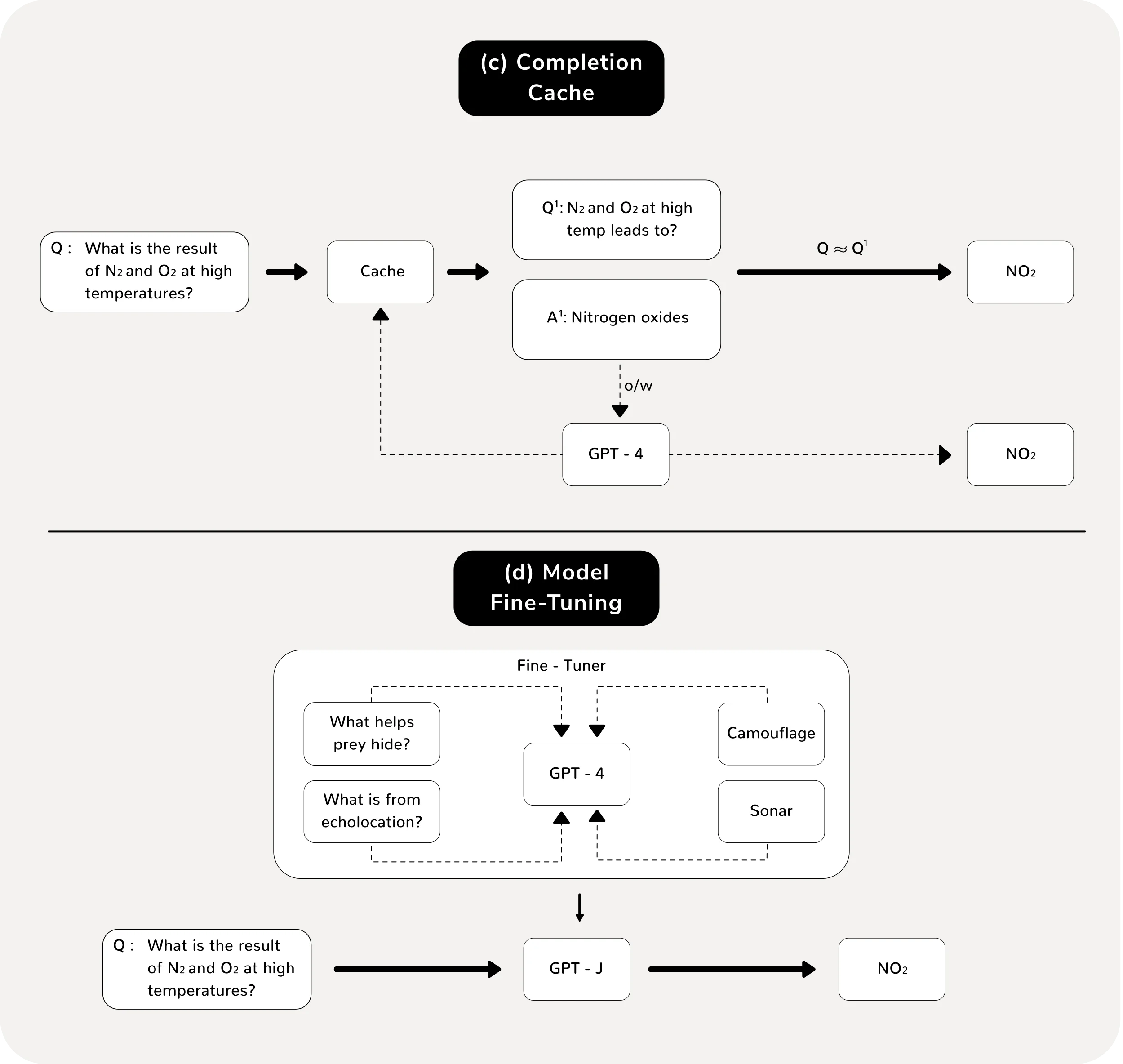

In this method, we find affordable alternatives to expensive LLM APIs. This means we will use other models and methods that will provide similar results at a lower cost.

Now, there are two ways to go about it.

Completion Cache: Imagine you have a database where you store previous queries and their corresponding LLM responses. When a new query comes in, you first check if there was a similar query before.

If yes, you retrieve the stored response instead of asking the expensive LLM API. This saves costs because you don't have to use the expensive API for every query.
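The lookup described above can be sketched with an in-memory list and a string-similarity check. The class name, the 0.9 threshold, and the use of Python's `difflib.SequenceMatcher` are assumptions for illustration; a production cache would more likely use a database and embedding-based similarity.

```python
from difflib import SequenceMatcher


class CompletionCache:
    """Stores past queries and responses, and returns close matches."""

    def __init__(self, threshold=0.9):
        self.threshold = threshold  # minimum similarity to count as a hit
        self.entries = []           # list of (normalized_query, response)

    @staticmethod
    def _normalize(query):
        return " ".join(query.lower().split())

    def lookup(self, query):
        normalized = self._normalize(query)
        best_score, best_response = 0.0, None
        for stored_query, response in self.entries:
            score = SequenceMatcher(None, normalized, stored_query).ratio()
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def store(self, query, response):
        self.entries.append((self._normalize(query), response))


def answer(query, cache, call_llm):
    """Return a cached response when possible, else call the LLM and cache it."""
    cached = cache.lookup(query)
    if cached is not None:
        return cached
    response = call_llm(query)
    cache.store(query, response)
    return response


calls = []

def fake_llm(query):
    # Stand-in for the expensive API; records each call so we can count them.
    calls.append(query)
    return f"(answer to: {query})"

cache = CompletionCache()
first = answer("What is your refund policy?", cache, fake_llm)
second = answer("what is your  refund policy?", cache, fake_llm)   # cache hit
third = answer("How do I cancel my subscription?", cache, fake_llm)
print(len(calls))
```

The threshold is the main design choice: set it too low and users get answers to questions they did not ask, too high and the cache rarely saves anything.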

Model Fine-Tuning: This method involves using a powerful and expensive LLM API to collect responses for a few queries. Then, using these collected responses, you train a smaller and more affordable AI model (e.g., GPT-3 Ada or Babbage).

This fine-tuned model can then handle new queries, providing cost savings and potentially faster response times. However, the fine-tuned model may not have the same level of knowledge as the larger LLM, so its accuracy might be slightly lower.
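The first half of this method, collecting responses from the expensive model, can be sketched as below. The prompt/completion JSONL layout is an assumption: training file formats differ between providers and model generations, so check your provider's fine-tuning documentation. The expensive model is replaced by a stub so the example runs offline.

```python
import json


def collect_training_records(queries, call_expensive_llm):
    """Ask the expensive model once per query and keep its answers as labels."""
    records = []
    for query in queries:
        records.append({"prompt": query, "completion": call_expensive_llm(query)})
    return records


def to_jsonl(records):
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


def fake_expensive_llm(query):
    # Stand-in for the powerful model, here labelling sentiment.
    return "positive" if "love" in query else "negative"


records = collect_training_records(
    ["I love this product", "This is terrible"], fake_expensive_llm
)
training_file_text = to_jsonl(records)
print(training_file_text)
```

The resulting text would then be uploaded as training data for the smaller model, which is where the provider-specific steps begin.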

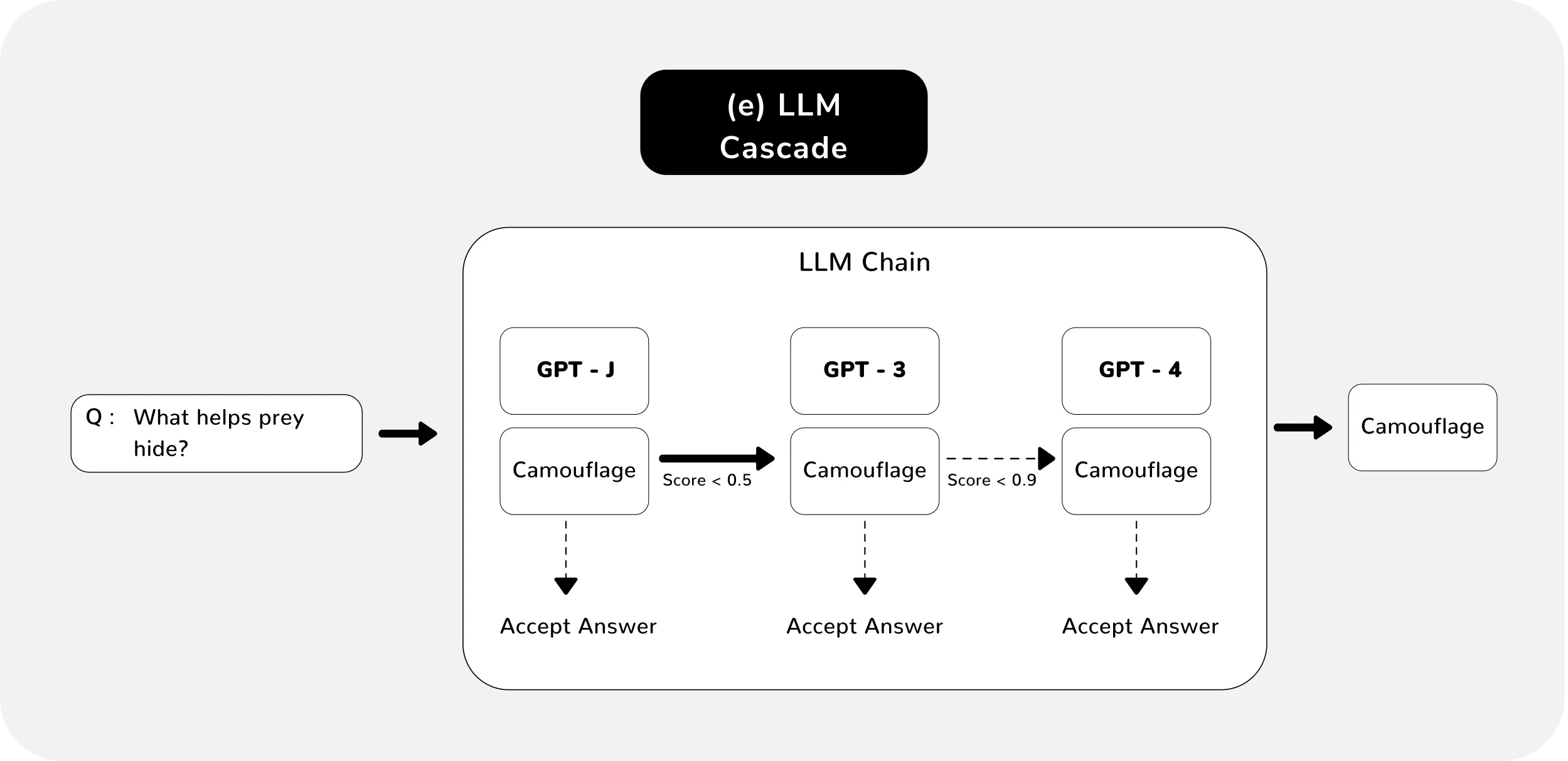

3. LLM Cascade

An LLM cascade involves querying multiple LLMs in sequence, from cheapest to most expensive, until one of them produces a satisfactory response to the user's prompt.

Here's a simplified explanation of how LLM cascade works:

- You have a list of LLMs, ranging from simple/cheap models to complex/expensive models.

- When a user enters a prompt or query, you first send it to the simplest model in the list.

- If the response from the simple model is reliable and meets your requirements, you stop there and return the response to the user. This helps reduce costs because simpler models are generally cheaper to use.

- However, if the response is not reliable or doesn't meet your requirements, you move down the cascade and query the next LLM in the list, which is slightly more complex and potentially more expensive.

- You continue this process until you receive a reliable response or exhaust all the LLMs in the list.
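The steps above can be sketched in a few lines. Everything here is illustrative: the model functions are stubs, the per-call costs are made-up numbers, and `score_response` stands in for whatever reliability check you use (a trained scorer, a rule, or a validation step).

```python
def run_cascade(query, models, score_response, threshold=0.8):
    """Query models from cheapest to most expensive until one is reliable.

    models: list of (name, call_model, cost_per_call), ordered cheapest first.
    Returns (response, model_name, total_cost).
    """
    total_cost = 0.0
    response, name = None, None
    for name, call_model, cost in models:
        response = call_model(query)
        total_cost += cost  # every model we try is paid for
        if score_response(query, response) >= threshold:
            break  # reliable enough, stop here
    return response, name, total_cost


def small_model(query):
    # Stub for a cheap model that only handles easy questions.
    return "4" if query == "What is 2 + 2?" else "I am not sure."

def large_model(query):
    # Stub for an expensive model.
    return "A detailed answer."

def score_response(query, response):
    # Stub reliability check: treat "not sure" as unreliable.
    return 0.0 if "not sure" in response else 1.0


models = [("small", small_model, 0.001), ("large", large_model, 0.03)]
easy = run_cascade("What is 2 + 2?", models, score_response)
hard = run_cascade("Summarize this contract.", models, score_response)
print(easy)
print(hard)
```

Note that a hard query costs more than calling the large model directly, because the failed attempt on the small model is also paid for. The cascade saves money only when enough queries stop early.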

Reduce ChatGPT Costs with FrugalGPT

Each of the methods above comes with its own challenges. Now, there is another approach that can help us overcome them.

The concept is to combine different strategies for a more efficient LLM cascade. The idea proposed is “joint prompt and LLM selection.” This means we use these strategies to select the smallest prompt and the most affordable LLM for satisfactory performance.

And that’s where FrugalGPT comes in.

What is FrugalGPT?

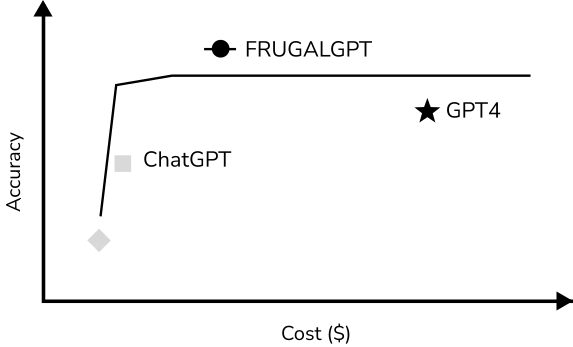

FrugalGPT is a system that aims to reduce the cost of using ChatGPT and other LLMs while maintaining or even improving their performance.

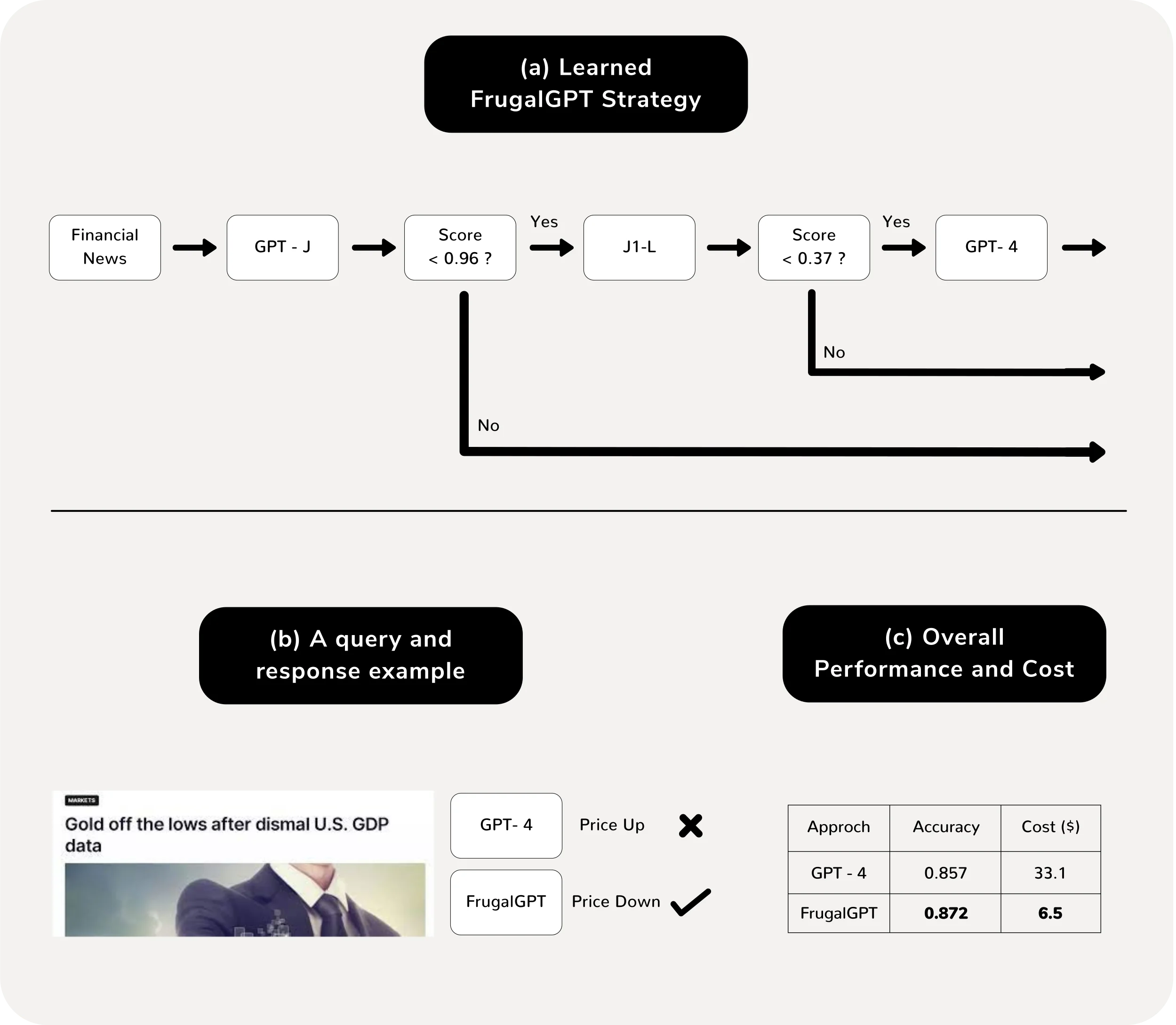

It is a simple yet flexible instantiation of the LLM cascade that learns which combinations of LLMs to use for different queries.
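To make the idea of joint prompt and LLM selection more concrete, here is a toy sketch. It is not FrugalGPT's actual algorithm: it simply assumes you have already measured cost and accuracy for a few prompt and model combinations on your own validation data, and picks the cheapest one that meets a target. All names and numbers are hypothetical.

```python
def cheapest_satisfactory(configs, target_accuracy):
    """Return the lowest-cost configuration that reaches the target accuracy."""
    good_enough = [c for c in configs if c["accuracy"] >= target_accuracy]
    if not good_enough:
        return None  # nothing meets the target
    return min(good_enough, key=lambda c: c["cost_per_query"])


# Hypothetical measurements from a validation set, for illustration only.
configs = [
    {"prompt": "10 examples", "model": "large", "cost_per_query": 0.060, "accuracy": 0.92},
    {"prompt": "2 examples", "model": "large", "cost_per_query": 0.020, "accuracy": 0.90},
    {"prompt": "10 examples", "model": "small", "cost_per_query": 0.004, "accuracy": 0.88},
    {"prompt": "2 examples", "model": "small", "cost_per_query": 0.001, "accuracy": 0.79},
]

choice = cheapest_satisfactory(configs, target_accuracy=0.85)
print(choice)
```

With these made-up numbers, the small model with the longer prompt wins: it clears the accuracy target at a fraction of the large model's cost.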

Benefits of FrugalGPT

- It uses multiple APIs to find a balance between cost and performance. Here, users can choose a balance suited to their needs.

- It is helpful for users as it reduces the costs of using AI (which was our aim in the first place).

- Furthermore, it can help LLM providers save energy and reduce carbon emissions.

What does the data show?

- Experiments show that FrugalGPT can match the performance of the best individual LLM (e.g. GPT-4) with up to 98% cost reduction. Furthermore, it can also improve the accuracy over GPT-4 by 4% at the same cost.

In conclusion

LLMs like ChatGPT have the potential to change the way businesses run. However, unless we reduce ChatGPT costs, they remain a huge obstacle. By using FrugalGPT or the other methods mentioned above, we can bring costs down significantly while maintaining satisfactory performance. Are you ready to transform your business with AI?
